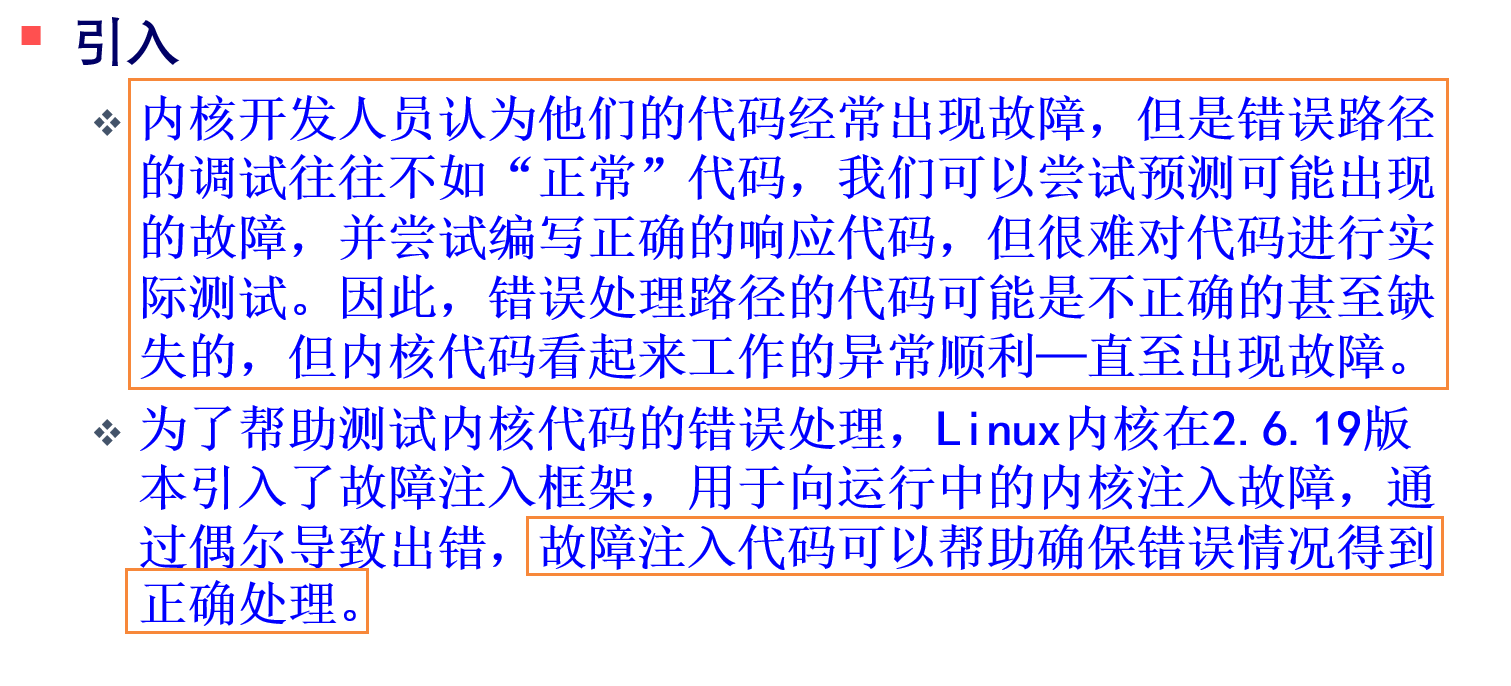

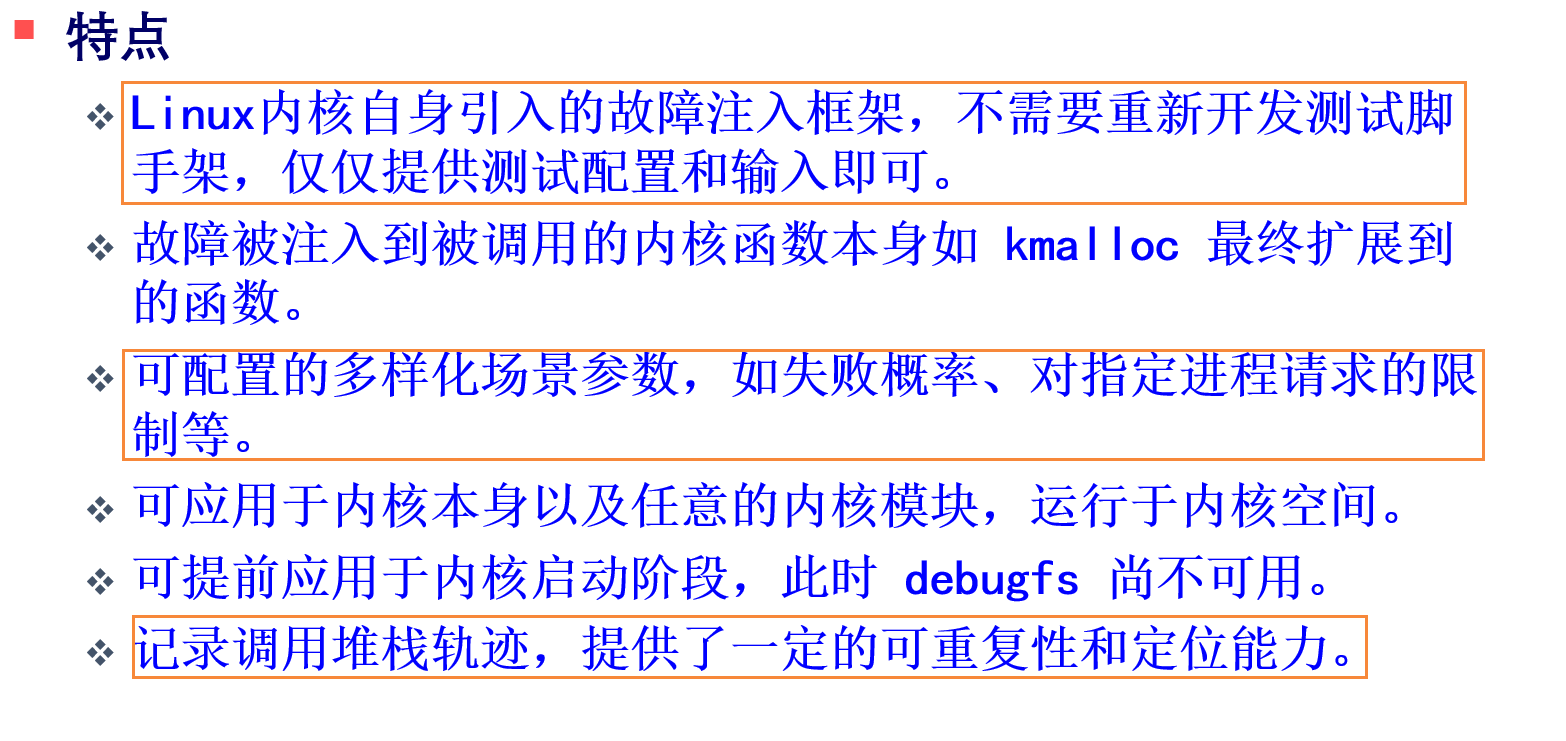

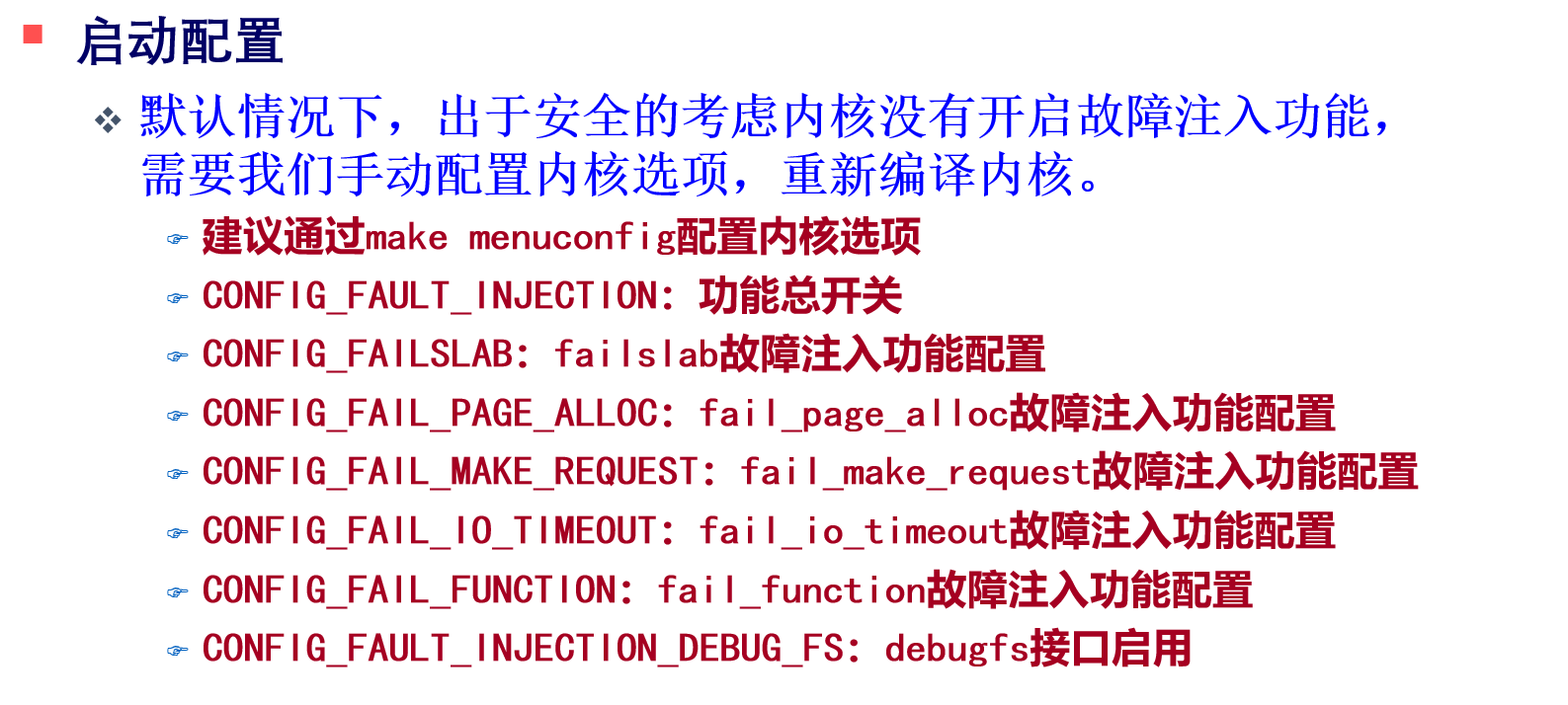

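How Linux Fault Injection Works

This article uses the fail_make_request fault type as an example to walk through the underlying mechanics of Linux fault injection.

1. Core data structure: fault_attr in include/linux/fault-inject.h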

```c
#ifdef CONFIG_FAULT_INJECTION

struct fault_attr {
    unsigned long probability;      /* chance of failure, in percent */
    unsigned long interval;         /* only every interval-th call may fail */
    atomic_t times;                 /* failures left, -1 means no limit */
    atomic_t space;                 /* byte budget used up before failing */
    unsigned long verbose;          /* 0: silent, 1: message, 2: + stack dump */
    bool task_filter;
    unsigned long stacktrace_depth;
    unsigned long require_start;
    unsigned long require_end;
    unsigned long reject_start;
    unsigned long reject_end;

    unsigned long count;
    struct ratelimit_state ratelimit_state;
    struct dentry *dname;
};

#define FAULT_ATTR_INITIALIZER { \
        .interval = 1, \
        .times = ATOMIC_INIT(1), \
        .require_end = ULONG_MAX, \
        .stacktrace_depth = 32, \
        .ratelimit_state = RATELIMIT_STATE_INIT_DISABLED, \
        .verbose = 2, \
        .dname = NULL, \
    }

#define DECLARE_FAULT_ATTR(name) struct fault_attr name = FAULT_ATTR_INITIALIZER
int setup_fault_attr(struct fault_attr *attr, char *str);
bool should_fail(struct fault_attr *attr, ssize_t size);

#ifdef CONFIG_FAULT_INJECTION_DEBUG_FS

struct dentry *fault_create_debugfs_attr(const char *name,
            struct dentry *parent, struct fault_attr *attr);

#else /* CONFIG_FAULT_INJECTION_DEBUG_FS */

static inline struct dentry *fault_create_debugfs_attr(const char *name,
            struct dentry *parent, struct fault_attr *attr)
{
    return ERR_PTR(-ENODEV);
}

#endif /* CONFIG_FAULT_INJECTION_DEBUG_FS */

#endif /* CONFIG_FAULT_INJECTION */
```

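This structure is the core of the fault-injection implementation. Most of its fields should look familiar, because each one corresponds to a configuration file exposed through debugfs. The last three fields are internal bookkeeping: count counts executions of the injection point (it drives the interval check), ratelimit_state controls how often log messages are emitted, and dname holds the dentry of the debugfs directory, whose name identifies the fault type (fail_make_request and so on).

Two questions to keep in mind: why are times and space declared as atomic_t? And how does ratelimit_state actually limit the log output rate?

2. Kernel module initialization: block/blk-core.c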

```c
#ifdef CONFIG_FAIL_MAKE_REQUEST

static DECLARE_FAULT_ATTR(fail_make_request);

static int __init setup_fail_make_request(char *str)
{
    return setup_fault_attr(&fail_make_request, str);
}
__setup("fail_make_request=", setup_fail_make_request);

static bool should_fail_request(struct hd_struct *part, unsigned int bytes)
{
    return part->make_it_fail && should_fail(&fail_make_request, bytes);
}

#else /* CONFIG_FAIL_MAKE_REQUEST */

static inline bool should_fail_request(struct hd_struct *part,
                                       unsigned int bytes)
{
    return false;
}

#endif /* CONFIG_FAIL_MAKE_REQUEST */
```

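The code statically defines a struct fault_attr instance named fail_make_request, which describes the fail_make_request fault type; DECLARE_FAULT_ATTR is the macro seen in the previous section. The __setup macro means that the boot parameter "fail_make_request=xxx" is handled during kernel initialization. The registered handler is setup_fail_make_request, which calls the generic setup_fault_attr function to fill in the fail_make_request variable from the parameter string.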

```c
int setup_fault_attr(struct fault_attr *attr, char *str)
{
    unsigned long probability;
    unsigned long interval;
    int times;
    int space;

    /* "<interval>,<probability>,<space>,<times>" */
    if (sscanf(str, "%lu,%lu,%d,%d",
               &interval, &probability, &space, &times) < 4) {
        printk(KERN_WARNING
               "FAULT_INJECTION: failed to parse arguments\n");
        return 0;
    }

    attr->probability = probability;
    attr->interval = interval;
    atomic_set(&attr->times, times);
    atomic_set(&attr->space, space);

    return 1;
}
EXPORT_SYMBOL_GPL(setup_fault_attr);
```
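To make the parameter format concrete, here is a minimal user-space sketch of the same parsing step. The struct fault_params type and the parse_fault_params function are hypothetical names introduced for illustration; only the format string and the field order (interval, probability, space, times) come from setup_fault_attr above.

```c
#include <stdio.h>

/* Hypothetical user-space stand-in for the four values the kernel parses. */
struct fault_params {
    unsigned long interval;
    unsigned long probability;
    int space;
    int times;
};

/*
 * Parse "<interval>,<probability>,<space>,<times>".
 * Returns 1 on success and 0 on a malformed string, mirroring the
 * return convention of setup_fault_attr.
 */
static int parse_fault_params(const char *str, struct fault_params *out)
{
    int matched = sscanf(str, "%lu,%lu,%d,%d",
                         &out->interval, &out->probability,
                         &out->space, &out->times);

    return matched == 4;
}
```

Under this sketch, a boot parameter such as fail_make_request=1,100,0,-1 would parse as interval 1, probability 100, space 0 and times -1, while a string with fewer than four fields is rejected.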

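Besides creating the core fail_make_request variable, the initialization stage also sets up the debugfs configuration interface: it creates an attribute directory named fail_make_request under the debugfs root: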

```c
#ifdef CONFIG_FAIL_MAKE_REQUEST
static int __init fail_make_request_debugfs(void)
{
    struct dentry *dir = fault_create_debugfs_attr("fail_make_request",
                                                   NULL, &fail_make_request);

    return PTR_ERR_OR_ZERO(dir);
}

late_initcall(fail_make_request_debugfs);
#endif /* CONFIG_FAIL_MAKE_REQUEST */
```

Let's see how fault_create_debugfs_attr creates the fault injection directory and the configuration files inside it:

```c
#ifdef CONFIG_FAULT_INJECTION_DEBUG_FS

struct dentry *fault_create_debugfs_attr(const char *name,
            struct dentry *parent, struct fault_attr *attr)
{
    umode_t mode = S_IFREG | S_IRUSR | S_IWUSR;
    struct dentry *dir;

    dir = debugfs_create_dir(name, parent);
    if (IS_ERR(dir))
        return dir;

    debugfs_create_ul("probability", mode, dir, &attr->probability);
    debugfs_create_ul("interval", mode, dir, &attr->interval);
    debugfs_create_atomic_t("times", mode, dir, &attr->times);
    debugfs_create_atomic_t("space", mode, dir, &attr->space);
    debugfs_create_ul("verbose", mode, dir, &attr->verbose);
    debugfs_create_u32("verbose_ratelimit_interval_ms", mode, dir,
                       &attr->ratelimit_state.interval);
    debugfs_create_u32("verbose_ratelimit_burst", mode, dir,
                       &attr->ratelimit_state.burst);
    debugfs_create_bool("task-filter", mode, dir, &attr->task_filter);

#ifdef CONFIG_FAULT_INJECTION_STACKTRACE_FILTER
    debugfs_create_stacktrace_depth("stacktrace-depth", mode, dir,
                                    &attr->stacktrace_depth);
    debugfs_create_ul("require-start", mode, dir, &attr->require_start);
    debugfs_create_ul("require-end", mode, dir, &attr->require_end);
    debugfs_create_ul("reject-start", mode, dir, &attr->reject_start);
    debugfs_create_ul("reject-end", mode, dir, &attr->reject_end);
#endif /* CONFIG_FAULT_INJECTION_STACKTRACE_FILTER */

    attr->dname = dget(dir);
    return dir;
}
EXPORT_SYMBOL_GPL(fault_create_debugfs_attr);

#endif /* CONFIG_FAULT_INJECTION_DEBUG_FS */
```

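The parent passed in is NULL, so the fail_make_request directory is created at the debugfs root. The function then creates the attribute files seen earlier (probability, interval, times, and so on) in that directory, and finally stores the directory's dentry in attr->dname.

Note: in practice, fault injection is configured by writing to the files under /sys/kernel/debug/fail_make_request/ (assuming debugfs is mounted at its usual location). Each file's file operations tie it to one field of fault_attr, so writing to a file indirectly modifies the fail_make_request variable in kernel space.

Take debugfs_create_ul creating the probability file as an example: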
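As a rough illustration of the user-space side, the sketch below writes a value into one attribute file. The write_attr helper is a hypothetical name, not part of any kernel or libc API; it only uses standard C file I/O and takes the directory as a parameter so it can be tried against any writable directory.

```c
#include <stdio.h>

/*
 * Write `value` into the file <dir>/<name>.
 * Returns 0 on success and -1 on any failure (path too long,
 * file cannot be opened, or the write/close fails).
 */
static int write_attr(const char *dir, const char *name, const char *value)
{
    char path[256];
    FILE *f;
    int n;

    n = snprintf(path, sizeof(path), "%s/%s", dir, name);
    if (n < 0 || (size_t)n >= sizeof(path))
        return -1;

    f = fopen(path, "w");
    if (!f)
        return -1;

    if (fputs(value, f) == EOF) {
        fclose(f);
        return -1;
    }

    /* fclose flushes the buffer, so a rejected write surfaces here. */
    return fclose(f) == 0 ? 0 : -1;
}
```

On a kernel built with CONFIG_FAIL_MAKE_REQUEST and with debugfs mounted, a call such as write_attr("/sys/kernel/debug/fail_make_request", "probability", "100") run as root would be expected to set the probability field to 100.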

```c
#ifdef CONFIG_FAULT_INJECTION_DEBUG_FS

static int debugfs_ul_set(void *data, u64 val)
{
    *(unsigned long *)data = val;
    return 0;
}

static int debugfs_ul_get(void *data, u64 *val)
{
    *val = *(unsigned long *)data;
    return 0;
}

DEFINE_SIMPLE_ATTRIBUTE(fops_ul, debugfs_ul_get, debugfs_ul_set, "%llu\n");

static void debugfs_create_ul(const char *name, umode_t mode,
                              struct dentry *parent, unsigned long *value)
{
    debugfs_create_file(name, mode, parent, value, &fops_ul);
}

#endif /* CONFIG_FAULT_INJECTION_DEBUG_FS */
```

The stacktrace-depth file is a special case. The corresponding field in fault_attr is also an unsigned long, but its value is capped at MAX_STACK_TRACE_DEPTH (32), so the file is created with a dedicated function, debugfs_create_stacktrace_depth:

```c
#ifdef CONFIG_FAULT_INJECTION_DEBUG_FS
#ifdef CONFIG_FAULT_INJECTION_STACKTRACE_FILTER

static int debugfs_stacktrace_depth_set(void *data, u64 val)
{
    *(unsigned long *)data =
        min_t(unsigned long, val, MAX_STACK_TRACE_DEPTH);

    return 0;
}

DEFINE_SIMPLE_ATTRIBUTE(fops_stacktrace_depth, debugfs_ul_get,
                        debugfs_stacktrace_depth_set, "%llu\n");

static void debugfs_create_stacktrace_depth(const char *name, umode_t mode,
                                            struct dentry *parent,
                                            unsigned long *value)
{
    debugfs_create_file(name, mode, parent, value, &fops_stacktrace_depth);
}

#endif /* CONFIG_FAULT_INJECTION_STACKTRACE_FILTER */
#endif /* CONFIG_FAULT_INJECTION_DEBUG_FS */
```

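==As you can see, the only difference between fops_stacktrace_depth and fops_ul is the set method: the former clamps the value to the maximum.==

3. Block device configuration interface: block/partition-generic.c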

```c
#ifdef CONFIG_FAIL_MAKE_REQUEST

static struct device_attribute dev_attr_fail =
    __ATTR(make-it-fail, 0644, part_fail_show, part_fail_store);

ssize_t part_fail_show(struct device *dev,
                       struct device_attribute *attr, char *buf)
{
    struct hd_struct *p = dev_to_part(dev);

    return sprintf(buf, "%d\n", p->make_it_fail);
}

ssize_t part_fail_store(struct device *dev,
                        struct device_attribute *attr,
                        const char *buf, size_t count)
{
    struct hd_struct *p = dev_to_part(dev);
    int i;

    /* Any nonzero value turns the per-device switch on. */
    if (count > 0 && sscanf(buf, "%d", &i) > 0)
        p->make_it_fail = (i == 0) ? 0 : 1;

    return count;
}

#endif /* CONFIG_FAIL_MAKE_REQUEST */
```

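This is a sysfs-based interface. When the user writes a nonzero value to /sys/block/sda/make-it-fail, the make_it_fail field of the device's struct hd_struct is set to 1, which turns the switch on.

4. I/O fault flow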

block/blk-core.c

```c
static noinline int should_fail_bio(struct bio *bio)
{
    if (should_fail_request(&bio->bi_disk->part0, bio->bi_iter.bi_size))
        return -EIO;
    return 0;
}
ALLOW_ERROR_INJECTION(should_fail_bio, ERRNO);

static bool should_fail_request(struct hd_struct *part, unsigned int bytes)
{
    return part->make_it_fail && should_fail(&fail_make_request, bytes);
}
```

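On the I/O submission path, should_fail_bio calls should_fail_request to decide whether to inject a fault. should_fail_request first checks whether the block device's make_it_fail switch is on; if it is, it calls should_fail with the configured fail_make_request attribute to determine whether the injection actually fires.

The final decision logic is as follows: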

```c
bool should_fail(struct fault_attr *attr, ssize_t size)
{
    /* Per-task fail_nth countdown: fail the call on which it hits zero. */
    if (in_task()) {
        unsigned int fail_nth = READ_ONCE(current->fail_nth);

        if (fail_nth) {
            if (!WRITE_ONCE(current->fail_nth, fail_nth - 1))
                goto fail;

            return false;
        }
    }

    /* No point in being called */
    if (attr->probability == 0)
        return false;

    if (attr->task_filter && !fail_task(attr, current))
        return false;

    /* The failure budget is exhausted. */
    if (atomic_read(&attr->times) == 0)
        return false;

    /* Consume the byte budget before any failure is injected. */
    if (atomic_read(&attr->space) > size) {
        atomic_sub(size, &attr->space);
        return false;
    }

    /* Only every interval-th call is a candidate. */
    if (attr->interval > 1) {
        attr->count++;
        if (attr->count % attr->interval)
            return false;
    }

    /* Fail with the configured percentage chance. */
    if (attr->probability <= prandom_u32() % 100)
        return false;

    if (!fail_stacktrace(attr))
        return false;

fail:
    fail_dump(attr);

    if (atomic_read(&attr->times) != -1)
        atomic_dec_not_zero(&attr->times);

    return true;
}
EXPORT_SYMBOL_GPL(should_fail);
```
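The order of these checks is easier to follow in a simplified user-space model. The sketch below is not the kernel implementation: struct model_attr and model_should_fail are hypothetical names, the fail_nth, task and stacktrace filters are left out, plain integers replace atomic_t, and the random draw is passed in as the roll argument (a value in the range 0 to 99) so that the behavior is deterministic.

```c
#include <stdbool.h>

/* Hypothetical, single-threaded model of the fields should_fail reads. */
struct model_attr {
    unsigned long probability; /* percent */
    unsigned long interval;    /* only every interval-th call may fail */
    int times;                 /* failures left, -1 means unlimited */
    int space;                 /* byte budget consumed before failing */
    unsigned long count;       /* calls seen by the interval check */
};

/* `roll` stands in for prandom_u32() % 100 in the kernel code. */
static bool model_should_fail(struct model_attr *a, int size, unsigned int roll)
{
    if (a->probability == 0)
        return false;

    if (a->times == 0)
        return false;

    if (a->space > size) {
        a->space -= size;
        return false;
    }

    if (a->interval > 1) {
        a->count++;
        if (a->count % a->interval)
            return false;
    }

    if (a->probability <= roll)
        return false;

    /* Decrement the remaining-failure counter unless it is unlimited. */
    if (a->times != -1 && a->times != 0)
        a->times--;

    return true;
}
```

With probability 100, interval 3 and times 2, this model fails the 3rd and 6th calls and nothing after that, which matches the intent of the interval and times options. With space set to 8192 and 4096-byte requests, the first request only consumes budget and the second one is the first that can fail.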

The task filter option:

```c
static bool fail_task(struct fault_attr *attr, struct task_struct *task)
{
    return in_task() && task->make_it_fail;
}
```

The stacktrace filter option:

```c
#ifdef CONFIG_FAULT_INJECTION_STACKTRACE_FILTER

static bool fail_stacktrace(struct fault_attr *attr)
{
    int depth = attr->stacktrace_depth;
    unsigned long entries[MAX_STACK_TRACE_DEPTH];
    int n, nr_entries;
    bool found = (attr->require_start == 0 && attr->require_end == ULONG_MAX);

    if (depth == 0)
        return found;

    nr_entries = stack_trace_save(entries, depth, 1);
    for (n = 0; n < nr_entries; n++) {
        if (attr->reject_start <= entries[n] &&
            entries[n] < attr->reject_end)
            return false;
        if (attr->require_start <= entries[n] &&
            entries[n] < attr->require_end)
            found = true;
    }
    return found;
}

#else

static inline bool fail_stacktrace(struct fault_attr *attr)
{
    return true;
}

#endif /* CONFIG_FAULT_INJECTION_STACKTRACE_FILTER */
```

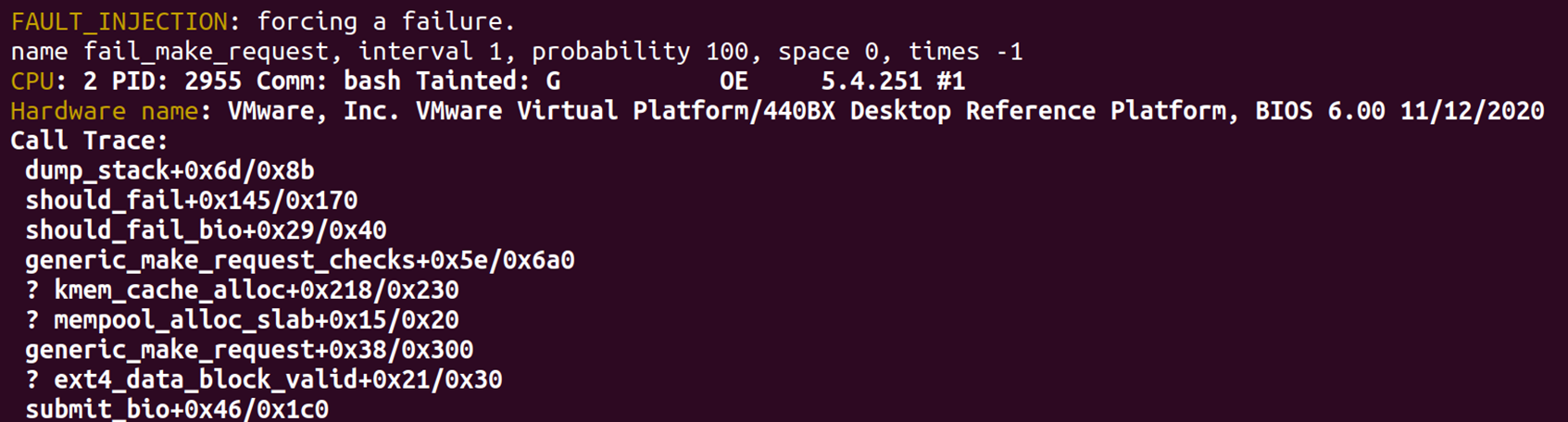
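The require/reject range logic of fail_stacktrace can be tried out in user space with a plain array of addresses in place of a real stack trace. The sketch below makes that substitution; struct range_filter and model_fail_stacktrace are hypothetical names, and the addresses in the usage note are made up.

```c
#include <limits.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical holder for the four address bounds kept in fault_attr. */
struct range_filter {
    unsigned long require_start, require_end; /* [start, end) must be hit */
    unsigned long reject_start, reject_end;   /* [start, end) must not be hit */
};

/*
 * Returns true when injection is allowed for the given call chain:
 * no entry falls inside the reject range, and either the require range
 * is left at its "match everything" default or some entry falls inside it.
 */
static bool model_fail_stacktrace(const struct range_filter *f,
                                  const unsigned long *entries, size_t n)
{
    bool found = (f->require_start == 0 && f->require_end == ULONG_MAX);
    size_t i;

    for (i = 0; i < n; i++) {
        if (f->reject_start <= entries[i] && entries[i] < f->reject_end)
            return false;
        if (f->require_start <= entries[i] && entries[i] < f->require_end)
            found = true;
    }
    return found;
}
```

For a call chain of 0x1000, 0x2000 and 0x3000, the default filter allows injection, a require range of 0x2000 to 0x2800 also allows it, a require range of 0x5000 to 0x6000 blocks it, and a reject range that covers 0x3000 blocks it regardless of the require range.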

Printing the fault injection message:

```c
static void fail_dump(struct fault_attr *attr)
{
    if (attr->verbose > 0 && __ratelimit(&attr->ratelimit_state)) {
        printk(KERN_NOTICE "FAULT_INJECTION: forcing a failure.\n"
               "name %pd, interval %lu, probability %lu, "
               "space %d, times %d\n", attr->dname,
               attr->interval, attr->probability,
               atomic_read(&attr->space),
               atomic_read(&attr->times));
        if (attr->verbose > 1)
            dump_stack();
    }
}
```

5. Log output rate limiting: include/linux/ratelimit.h

```c
#define DEFAULT_RATELIMIT_INTERVAL (5 * HZ)
#define DEFAULT_RATELIMIT_BURST    10

#define RATELIMIT_MSG_ON_RELEASE   BIT(0)

struct ratelimit_state {
    raw_spinlock_t lock;

    int interval;
    int burst;
    int printed;
    int missed;
    unsigned long begin;
    unsigned long flags;
};

#define RATELIMIT_STATE_INIT_FLAGS(name, interval_init, burst_init, flags_init) { \
        .lock     = __RAW_SPIN_LOCK_UNLOCKED(name.lock), \
        .interval = interval_init, \
        .burst    = burst_init, \
        .flags    = flags_init, \
    }

#define RATELIMIT_STATE_INIT(name, interval_init, burst_init) \
    RATELIMIT_STATE_INIT_FLAGS(name, interval_init, burst_init, 0)

#define RATELIMIT_STATE_INIT_DISABLED \
    RATELIMIT_STATE_INIT(ratelimit_state, 0, DEFAULT_RATELIMIT_BURST)
```

lib/ratelimit.c

```c
int ___ratelimit(struct ratelimit_state *rs, const char *func)
{
    int interval = READ_ONCE(rs->interval);
    int burst = READ_ONCE(rs->burst);
    unsigned long flags;
    int ret;

    if (!interval)
        return 1;

    if (!raw_spin_trylock_irqsave(&rs->lock, flags))
        return 0;

    if (!rs->begin)
        rs->begin = jiffies;

    if (time_is_before_jiffies(rs->begin + interval)) {
        if (rs->missed) {
            if (!(rs->flags & RATELIMIT_MSG_ON_RELEASE)) {
                printk_deferred(KERN_WARNING
                                "%s: %d callbacks suppressed\n",
                                func, rs->missed);
                rs->missed = 0;
            }
        }
        rs->begin = jiffies;
        rs->printed = 0;
    }
    if (burst && burst > rs->printed) {
        rs->printed++;
        ret = 1;
    } else {
        rs->missed++;
        ret = 0;
    }
    raw_spin_unlock_irqrestore(&rs->lock, flags);

    return ret;
}
EXPORT_SYMBOL(___ratelimit);
```
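The windowing behavior of ___ratelimit can be modeled in user space by passing the current time in explicitly. This is a simplified sketch, not the kernel code: struct model_ratelimit and model_ratelimit are hypothetical names, there is no locking, jiffies wraparound is ignored, and the "callbacks suppressed" message is replaced by simply clearing the missed counter.

```c
/* Hypothetical single-threaded model of struct ratelimit_state. */
struct model_ratelimit {
    int interval;        /* window length in ticks, 0 disables limiting */
    int burst;           /* messages allowed per window */
    int printed;         /* messages let through in the current window */
    int missed;          /* messages suppressed in the current window */
    unsigned long begin; /* tick at which the current window started */
};

/* Returns 1 if the caller may print at tick `now`, 0 if it must stay quiet. */
static int model_ratelimit(struct model_ratelimit *rs, unsigned long now)
{
    if (!rs->interval)
        return 1;

    if (!rs->begin)
        rs->begin = now;

    /* The window has expired: start a new one and reset the counters. */
    if (now > rs->begin + (unsigned long)rs->interval) {
        rs->missed = 0;
        rs->begin = now;
        rs->printed = 0;
    }

    if (rs->burst && rs->burst > rs->printed) {
        rs->printed++;
        return 1;
    }

    rs->missed++;
    return 0;
}
```

With interval 10 and burst 2, calls at ticks 100 and 101 are allowed, calls at 102 and 110 are suppressed, and the call at 111 opens a new window and is allowed again. An interval of 0 always allows printing, which is the state FAULT_ATTR_INITIALIZER selects through RATELIMIT_STATE_INIT_DISABLED, so fault injection messages are not rate limited until verbose_ratelimit_interval_ms is set.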

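6. Summary and reflections

Linux Fault Injection is a kernel facility that gives kernel developers a way to check code robustness by injecting faults. The overall idea is to reserve key extension points that have a default implementation, such as should_fail_request (which simply returns false when CONFIG_FAIL_MAKE_REQUEST is disabled), and to compile in different behavior for those points depending on the macros defined by kernel build options. To make it quick to adjust and test fault injection behavior, the facility also exposes a debugfs interface to user space, whose file operations are bound to the fault injection attributes in kernel space. The configurable options are injection probability, interval, maximum number of failures, task filtering, size budget (space), stack trace address ranges, and log verbosity and rate.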